You can reduce file I/O overhead by writing to the file in large blocks, which cuts the number of individual write operations.

    #define CHUNK_SIZE 4096

    char file_buffer[CHUNK_SIZE + 64] ; // 4 KB buffer, plus room for
                                        // at least one more line
    int buffer_count = 0 ;
    int i = 0 ;

    while( i < cnt )
    {
        buffer_count += sprintf( &file_buffer[buffer_count], "%d %d %d\n", a[i], b[i], c[i] ) ;
        i++ ;

        // If the chunk is big enough, write it.
        if( buffer_count >= CHUNK_SIZE )
        {
            fwrite( file_buffer, buffer_count, 1, f ) ;
            buffer_count = 0 ;
        }
    }

    // Write remainder
    if( buffer_count > 0 )
    {
        fwrite( file_buffer, buffer_count, 1, f ) ;
    }

There may be some advantage in writing exactly 4096 bytes (or some other power of two) in a single write, but that is largely file-system dependent and the code to do that becomes a little more complicated:

    #define CHUNK_SIZE 4096

    char file_buffer[CHUNK_SIZE + 64] ;
    int buffer_count = 0 ;
    int i = 0 ;

    while( i < cnt )
    {
        buffer_count += sprintf( &file_buffer[buffer_count], "%d %d %d\n", a[i], b[i], c[i] ) ;
        i++ ;

        // If the chunk is big enough, write exactly CHUNK_SIZE bytes.
        if( buffer_count >= CHUNK_SIZE )
        {
            fwrite( file_buffer, CHUNK_SIZE, 1, f ) ;
            buffer_count -= CHUNK_SIZE ;

            // Move the leftover bytes to the front of the buffer. The leftover
            // is shorter than one line, so the two regions cannot overlap.
            memcpy( file_buffer, &file_buffer[CHUNK_SIZE], buffer_count ) ;
        }
    }

    // Write remainder
    if( buffer_count > 0 )
    {
        fwrite( file_buffer, 1, buffer_count, f ) ;
    }

You might experiment with different values for CHUNK_SIZE – larger may be optimal, or you may find that it makes little difference. I suggest at least 512 bytes.
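To try several chunk sizes without recompiling, the buffer can be sized at run time instead of through the `CHUNK_SIZE` macro. The following is only a minimal sketch of the "ragged" variant under stated assumptions: the helper name `block_write_sized`, the `LINE_ROOM` constant, and the constant test values are hypothetical and chosen for illustration, not taken from the code that was measured.

```c
#include <stdio.h>
#include <stdlib.h>

#define LINE_ROOM 64  /* assumed upper bound on one formatted line */

/* Hypothetical helper (not part of the original test code): writes `count`
   lines to `path`, flushing whenever at least `chunk_size` bytes are
   buffered. Returns 0 on success, -1 on any failure. */
int block_write_sized( const char* path, int count, size_t chunk_size )
{
    FILE* f = fopen( path, "w" );
    if( f == NULL ) return -1;

    char* buffer = malloc( chunk_size + LINE_ROOM );
    if( buffer == NULL ) { fclose( f ); return -1; }

    size_t used = 0;
    int ok = 1;
    for( int i = 0; ok && i < count; i++ )
    {
        /* snprintf never writes past the LINE_ROOM bytes of spare space. */
        int n = snprintf( &buffer[used], LINE_ROOM, "%d %d %d\n", 1234, 5678, 9012 );
        if( n < 0 || n >= LINE_ROOM ) { ok = 0; break; }
        used += (size_t)n;

        /* Ragged write: flush everything once the chunk is big enough. */
        if( used >= chunk_size )
        {
            ok = ( fwrite( buffer, 1, used, f ) == used );
            used = 0;
        }
    }

    /* Write remainder. */
    if( ok && used > 0 ) ok = ( fwrite( buffer, 1, used, f ) == used );

    free( buffer );
    if( fclose( f ) != 0 ) ok = 0;
    return ok ? 0 : -1;
}
```

Calling, say, `block_write_sized( "out.txt", 100000, 512 )` and then again with 4096 or 32768 lets you time each size in one run. The heap allocation is a design choice for flexibility; a fixed array as in the snippets above is just as valid when the size is known at compile time.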

Test results:

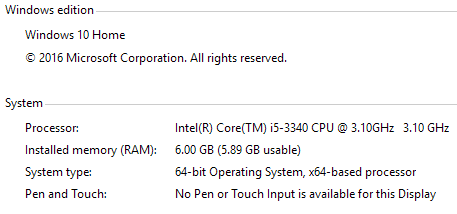

Using VC++ 2015, on the following platform:

With a Seagate ST1000DM003 1TB 64MB Cache SATA 6.0Gb/s Hard Drive.

The results for a single test writing 100000 lines are highly variable, as you might expect on a desktop system running multiple processes sharing the same hard drive, so I ran each test 100 times and took the minimum time (as can be seen in the code below the results):

Using default “Debug” build settings (4K blocks):

line_by_line: 0.195000 seconds

block_write1: 0.154000 seconds

block_write2: 0.143000 seconds

Using default “Release” build settings (4K blocks):

line_by_line: 0.067000 seconds

block_write1: 0.037000 seconds

block_write2: 0.036000 seconds

Optimisation sped up all three implementations by a broadly similar factor (roughly 3 to 4 times); the fixed-size chunk write was marginally faster than the “ragged” chunk.
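The effect of optimisation can be made concrete by dividing each Debug time by the matching Release time. The sketch below does only that arithmetic on the 32-bit, 4K-block figures quoted above; the function names `speedup` and `print_release_speedups` are hypothetical and introduced purely for illustration.

```c
#include <stdio.h>

/* Ratio of a slower time to a faster time; 2.0 means "twice as fast". */
double speedup( double slower_s, double faster_s )
{
    return slower_s / faster_s;
}

/* Prints the Debug-to-Release speedup for each implementation, using the
   32-bit, 4K-block timings quoted above. */
void print_release_speedups( void )
{
    printf( "line_by_line: %.2fx\n", speedup( 0.195, 0.067 ) );  /* 2.91x */
    printf( "block_write1: %.2fx\n", speedup( 0.154, 0.037 ) );  /* 4.16x */
    printf( "block_write2: %.2fx\n", speedup( 0.143, 0.036 ) );  /* 3.97x */
}
```

On these numbers the block writes gain somewhat more from optimisation (about 4x) than the line-by-line write (about 2.9x), and in the Release build a block write is about 1.8 times faster than writing line by line (0.067 / 0.037).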

When 32K blocks were used the performance was only slightly higher and the difference between the fixed and ragged versions negligible:

Using default “Release” build settings (32K blocks):

block_write1: 0.036000 seconds

block_write2: 0.036000 seconds

Using 512-byte blocks was not measurably different from 4K blocks:

Using default “Release” build settings (512 byte blocks):

block_write1: 0.036000 seconds

block_write2: 0.037000 seconds

All the above were 32bit (x86) builds. Building 64 bit code (x64) yielded interesting results:

Using default “Release” build settings (4K blocks), 64-bit code:

line_by_line: 0.049000 seconds

block_write1: 0.038000 seconds

block_write2: 0.032000 seconds

Compared with the 32-bit build, the ragged block write was marginally slower (though perhaps not significantly so), while the fixed block write and the line-by-line write were both noticeably faster (though line-by-line was still slower than either block write).

The test code (4K block version):

    #include <stdio.h>
    #include <string.h>
    #include <time.h>

    void line_by_line_write( int count )
    {
        FILE* f = fopen("line_by_line_write.txt", "w");

        for( int i = 0; i < count; i++)
        {
            fprintf(f, "%d %d %d\n", 1234, 5678, 9012 ) ;
        }

        fclose(f);
    }

    #define CHUNK_SIZE (4096)

    void block_write1( int count )
    {
        FILE* f = fopen("block_write1.txt", "w");
        char file_buffer[CHUNK_SIZE + 64];
        int buffer_count = 0;
        int i = 0;

        while( i < count )
        {
            buffer_count += sprintf( &file_buffer[buffer_count], "%d %d %d\n", 1234, 5678, 9012 );
            i++;

            // If the chunk is big enough, write it.
            if( buffer_count >= CHUNK_SIZE )
            {
                fwrite( file_buffer, buffer_count, 1, f );
                buffer_count = 0 ;
            }
        }

        // Write remainder
        if( buffer_count > 0 )
        {
            fwrite( file_buffer, 1, buffer_count, f );
        }

        fclose(f);
    }

    void block_write2( int count )
    {
        FILE* f = fopen("block_write2.txt", "w");
        char file_buffer[CHUNK_SIZE + 64];
        int buffer_count = 0;
        int i = 0;

        while( i < count )
        {
            buffer_count += sprintf( &file_buffer[buffer_count], "%d %d %d\n", 1234, 5678, 9012 );
            i++;

            // If the chunk is big enough, write exactly CHUNK_SIZE bytes.
            if( buffer_count >= CHUNK_SIZE )
            {
                fwrite( file_buffer, CHUNK_SIZE, 1, f );
                buffer_count -= CHUNK_SIZE;
                memcpy( file_buffer, &file_buffer[CHUNK_SIZE], buffer_count );
            }
        }

        // Write remainder
        if( buffer_count > 0 )
        {
            fwrite( file_buffer, 1, buffer_count, f );
        }

        fclose(f);
    }

    #define LINES 100000

    int main( void )
    {
        clock_t line_by_line_write_minimum = 9999 ;
        clock_t block_write1_minimum = 9999 ;
        clock_t block_write2_minimum = 9999 ;

        for( int i = 0; i < 100; i++ )
        {
            clock_t start = clock() ;
            line_by_line_write( LINES ) ;
            clock_t t = clock() - start ;
            if( t < line_by_line_write_minimum ) line_by_line_write_minimum = t ;

            start = clock() ;
            block_write1( LINES ) ;
            t = clock() - start ;
            if( t < block_write1_minimum ) block_write1_minimum = t ;

            start = clock() ;
            block_write2( LINES ) ;
            t = clock() - start ;
            if( t < block_write2_minimum ) block_write2_minimum = t ;
        }

        printf( "line_by_line: %f seconds\n", (float)(line_by_line_write_minimum) / CLOCKS_PER_SEC ) ;
        printf( "block_write1: %f seconds\n", (float)(block_write1_minimum) / CLOCKS_PER_SEC ) ;
        printf( "block_write2: %f seconds\n", (float)(block_write2_minimum) / CLOCKS_PER_SEC ) ;
    }
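The three timing sequences in `main` repeat the same "run, measure, keep the minimum" pattern, which could be factored into one helper. This is a sketch only, not how the results above were produced: `min_clock_of_runs` and `sample_workload` are hypothetical names, and the helper seeds the minimum from the first measurement instead of the `9999` sentinel so it does not depend on the value of `CLOCKS_PER_SEC`.

```c
#include <time.h>

/* Hypothetical helper: runs fn(arg) `runs` times and returns the smallest
   elapsed clock() interval, or (clock_t)-1 if `runs` is not positive. */
clock_t min_clock_of_runs( void (*fn)( int ), int arg, int runs )
{
    clock_t best = (clock_t)-1;
    for( int i = 0; i < runs; i++ )
    {
        clock_t start = clock();
        fn( arg );
        clock_t elapsed = clock() - start;

        /* The first run always seeds the minimum. */
        if( i == 0 || elapsed < best ) best = elapsed;
    }
    return best;
}

/* Stand-in workload for illustration; any void f(int) has the right shape. */
static volatile unsigned long workload_sink;
void sample_workload( int n )
{
    unsigned long sum = 0;
    for( int i = 0; i < n; i++ ) sum += (unsigned long)i;
    workload_sink = sum;
}
```

With this in place, each measurement in `main` would reduce to a call such as `min_clock_of_runs( block_write1, LINES, 100 )`, divided by `CLOCKS_PER_SEC` when printed. Note that `clock()` reports processor time on POSIX systems but elapsed wall-clock time with the Microsoft C runtime, so figures from different platforms are not directly comparable.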

